How to Deploy a Lightweight Kubernetes Cluster on Linux

Kubernetes has become the industry standard for managing containers. Yet, setting up a complete Kubernetes environment can be challenging and resource-demanding. That’s where k0s comes in — a compact, single-binary Kubernetes distribution that prioritizes ease of use, security, and low system overhead.

In this guide, you’ll learn how to set up a full Kubernetes cluster using k0s on Linux systems, even with limited hardware. Whether you’re deploying on virtual machines, cloud instances, or physical hardware, k0s streamlines the setup process.

2. Benefits of Using k0s for Kubernetes

Developed by Mirantis, k0s stands out with several distinct advantages:

- ✅ Single executable – One binary, no complex installs.

- ✅ No host-level dependencies – containerd is bundled into the k0s binary, so you don't need to install Docker or containerd separately.

- ✅ Secure defaults – The control plane is isolated from workloads, and its components communicate over TLS out of the box.

- ✅ Ready for production – Works well for development, edge environments, and live deployments.

- ✅ Lightweight footprint – Operates efficiently on VMs, bare-metal, or even Raspberry Pi devices.

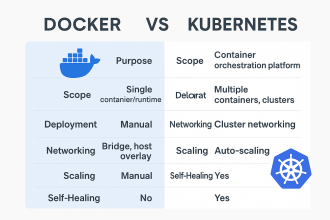

3. Comparing k0s With Other Kubernetes Distributions

| Feature | k0s | k3s | kubeadm | Minikube |
|---|---|---|---|---|
| Binary Count | 1 | 1 | Many | Many |
| Resource Usage | Low | Low | Medium | High |
| Rootless Workers | ✅ | ❌ | ❌ | ❌ |
| Secure by Default | ✅ | ❌ | Partial | ❌ |
| Installation Ease | Very Easy | Easy | Medium | Easy |

4. Prerequisites

To follow this tutorial, ensure you have the following setup:

Controller Node Requirements

- 2 vCPUs

- Minimum 2 GB RAM

- 20 GB storage

- Ubuntu 22.04 LTS recommended

Worker Node Requirements

- At least 1 vCPU

- 1 GB RAM or more

- 20 GB disk space

- Ubuntu 22.04 or compatible OS

General Requirements for All Nodes

- SSH enabled

- `curl` and `sudo` installed

- Swap disabled (`sudo swapoff -a`; also comment out any swap entries in `/etc/fstab` so the change survives a reboot)
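You can check these requirements on each node before installing k0s. The Bash sketch below shows one way to do it on a Linux host that exposes `/proc/meminfo` and `/proc/swaps`. The function names are illustrative choices, not part of k0s. The same goes for the 1800 MB memory threshold, which is set below 2048 MB because MemTotal reports slightly less than the installed RAM.

```bash
# Pre-flight check sketch for a k0s node. Thresholds are assumptions based on
# the requirements listed above; adjust them per node role.

# Print total memory in MB, read from a meminfo-style file.
mem_total_mb() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Succeed if a /proc/swaps-style file lists any active swap area
# (the file always starts with a single header line).
swap_active() {
  [ "$(wc -l < "${1:-/proc/swaps}")" -gt 1 ]
}

# Print one line per failed check; return non-zero if anything failed.
check_node() {
  local min_cpus="${1:-2}" min_mem_mb="${2:-1800}" failed=0 tool
  if [ "$(nproc)" -lt "$min_cpus" ]; then
    echo "FAIL: fewer than $min_cpus vCPUs"; failed=1
  fi
  if [ "$(mem_total_mb)" -lt "$min_mem_mb" ]; then
    echo "FAIL: less than $min_mem_mb MB of RAM"; failed=1
  fi
  if swap_active; then
    echo "FAIL: swap is enabled (run: sudo swapoff -a)"; failed=1
  fi
  for tool in curl sudo; do
    command -v "$tool" >/dev/null || { echo "FAIL: $tool not installed"; failed=1; }
  done
  return "$failed"
}

# Usage: controller: check_node 2 1800    worker: check_node 1 900
```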

5. Understanding k0s Architecture

A k0s cluster involves three main pieces:

- k0s controller – Runs the control plane: API server, etcd, scheduler, and controller manager.

- k0s worker – Runs the kubelet and the container runtime that host your application containers.

- k0sctl (optional) – A separate command-line tool that installs, upgrades, and manages k0s clusters over SSH.

6. How to Install k0s on Linux

Step 1: Download k0s on the Controller

```bash
curl -sSLf https://get.k0s.sh | sudo sh
```

Step 2: Check Installed Version

```bash
k0s version
```

Step 3: Initialize the Controller Node

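`--single` sets up a self-contained one-node cluster and is not meant for joining further nodes. Because section 7 adds workers, install the controller with `--enable-worker` so that it also runs workloads. If you want a dedicated control plane, leave the flag out; the controller then does not appear in `kubectl get nodes`.

```bash
sudo k0s install controller --enable-worker
sudo k0s start
sudo k0s status
```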

Step 4: Confirm Kubernetes Setup

```bash
sudo k0s kubectl get nodes
```
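After `k0s start`, the node can take a minute or two to become Ready. The following minimal sketch blocks until the kube-system pods are up, and only then should you check the nodes. `wait_for_system_pods` is a hypothetical helper. The `KUBECTL` array lets you swap in a standalone `kubectl` once you have exported a kubeconfig. Note that `kubectl wait --all` exits with an error if no pods exist yet, so rerun it if it fails right after startup.

```bash
# Command prefix used to reach the cluster; replace with (kubectl) once a
# kubeconfig has been exported.
KUBECTL=(sudo k0s kubectl)

# Block until every pod in kube-system reports Ready, or the timeout expires.
wait_for_system_pods() {
  local timeout="${1:-180s}"
  "${KUBECTL[@]}" wait --for=condition=Ready pods --all \
    --namespace kube-system --timeout="$timeout"
}

# Usage: wait_for_system_pods 300s && sudo k0s kubectl get nodes -o wide
```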

7. Adding Worker Nodes to the Cluster

Step 1: Generate Join Token on Controller

```bash
sudo k0s token create --role=worker
```
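The token from Step 1 still has to get to the worker. The sketch below is one way to handle that, assuming SSH access from the controller to the worker. It creates a token whose lifetime is limited with `--expiry`, which takes a Go-style duration such as `1h`. It then copies the token with `scp` to `/tmp/token`, the path used in Step 3. The function name and the one-hour default are assumptions.

```bash
K0S=(sudo k0s)

# Create a worker join token and copy it to a worker over SSH.
# worker_host is a placeholder such as user@192.0.2.20.
send_worker_token() {
  local worker_host="$1" expiry="${2:-1h}" token_file
  # mktemp creates the file with mode 0600, keeping the token private locally.
  token_file="$(mktemp)" || return
  "${K0S[@]}" token create --role=worker --expiry="$expiry" > "$token_file" &&
    scp "$token_file" "$worker_host:/tmp/token"
  local status=$?
  # The token grants join rights, so do not leave a copy lying around.
  rm -f "$token_file"
  return "$status"
}

# Usage: send_worker_token user@192.0.2.20 30m
```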

Step 2: Install k0s on Worker Node

```bash
curl -sSLf https://get.k0s.sh | sudo sh
```

Step 3: Join Worker to the Cluster

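Save the token printed in Step 1 to a file on the worker (this guide uses `/tmp/token`), then install and start the worker service:

```bash
sudo k0s install worker --token-file /tmp/token
sudo k0s start
```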

Step 4: Confirm Worker is Active

Back on the controller:

```bash
sudo k0s kubectl get nodes
```
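A freshly joined worker can take some time to register and report Ready. The sketch below first polls for the Node object, because `kubectl wait` fails immediately if the object does not exist yet, and then waits for the Ready condition. `wait_for_node`, the 5-second poll interval and the retry count are illustrative assumptions.

```bash
KUBECTL=(sudo k0s kubectl)

# Wait until the named node (the worker's hostname as shown by
# "kubectl get nodes") is registered and reports Ready.
wait_for_node() {
  local node="$1" timeout="${2:-120s}" tries="${3:-30}"
  # Poll every 5 s for the Node object, giving up after $tries attempts.
  until "${KUBECTL[@]}" get "node/$node" >/dev/null 2>&1; do
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] || { echo "node $node never registered" >&2; return 1; }
    sleep 5
  done
  "${KUBECTL[@]}" wait --for=condition=Ready "node/$node" --timeout="$timeout"
}

# Usage: wait_for_node worker1
```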

8. Deploying Your First App (Nginx)

Save the following manifest as nginx-deploy.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
```

Apply the Deployment

```bash
sudo k0s kubectl apply -f nginx-deploy.yaml
```

Check Pod Status

```bash
sudo k0s kubectl get pods
```
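Rather than re-running `get pods` until both replicas are up, you can block on the rollout itself. The following sketch uses `kubectl rollout status` and then lists the pods. It assumes the `app=nginx` label from the manifest above, and the helper name is hypothetical.

```bash
KUBECTL=(sudo k0s kubectl)

# Wait for the Deployment rollout to finish, then list its pods by label.
# Assumes the pod label app=<deployment> matches the Deployment name, as in
# the manifest above.
wait_for_rollout() {
  local deployment="${1:-nginx}" timeout="${2:-120s}"
  "${KUBECTL[@]}" rollout status "deployment/$deployment" --timeout="$timeout" &&
    "${KUBECTL[@]}" get pods -l "app=$deployment" -o wide
}

# Usage: wait_for_rollout nginx 180s
```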

Expose the App via NodePort

```bash
sudo k0s kubectl expose deployment nginx --type=NodePort --port=80
sudo k0s kubectl get svc
```

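Then open `http://<worker-node-ip>:<node-port>`, where `<node-port>` is the port in the 30000–32767 range shown after `80:` in the PORT(S) column.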
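In scripts, you can look up the NodePort with a JSONPath query instead of reading the table by eye. The minimal sketch below assumes the service is named `nginx`, as created by `kubectl expose` above. `nginx_url` and the sample IP are placeholders.

```bash
KUBECTL=(sudo k0s kubectl)

# Print the URL of the nginx NodePort service for the given node IP.
nginx_url() {
  local node_ip="$1" port
  port="$("${KUBECTL[@]}" get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')" || return
  echo "http://${node_ip}:${port}"
}

# Usage (192.0.2.10 is a placeholder worker IP):
#   curl -s "$(nginx_url 192.0.2.10)" | grep -i "welcome to nginx"
```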

9. Managing the Cluster

Export kubeconfig

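This lets a standalone `kubectl` (and Helm) talk to the cluster. It overwrites any existing `~/.kube/config`.

```bash
mkdir -p ~/.kube
sudo k0s kubeconfig admin > ~/.kube/config
```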
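The admin kubeconfig grants full cluster-admin rights, so keep it private. The sketch below writes the file so that only your user can read it. `export_kubeconfig` is a hypothetical helper. Its destination defaults to `~/.kube/config`, which it overwrites; to keep an existing config, pass another path and point `KUBECONFIG` at it.

```bash
K0S=(sudo k0s)

# Write the admin kubeconfig to a file readable only by the current user.
export_kubeconfig() {
  local dest="${1:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$dest")" || return
  # umask protects a newly created file; chmod also tightens an existing one.
  ( umask 077 && "${K0S[@]}" kubeconfig admin > "$dest" ) && chmod 600 "$dest"
}

# Usage: export_kubeconfig && kubectl get nodes
```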

Cluster Information

```bash
sudo k0s kubectl cluster-info
```

10. Monitoring and Logs

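Install basic monitoring with the kube-prometheus-stack Helm chart. This requires Helm and the kubeconfig exported in section 9:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
helm install kube-prometheus prometheus-community/kube-prometheus-stack
```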

Use Loki + Promtail for centralized logging.
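A port-forward is usually enough to open Grafana from the kube-prometheus-stack release installed above. This sketch assumes the chart's default naming (`<release>-grafana`, here `kube-prometheus-grafana`) and service port 80. It also assumes the release went into the `default` namespace, as in the `helm install` command above. If your chart version differs, check the name with `kubectl get svc`.

```bash
KUBECTL=(sudo k0s kubectl)

# Forward a local port to the Grafana service of the "kube-prometheus"
# release (service name and port 80 are assumptions about chart defaults).
grafana_port_forward() {
  local namespace="${1:-default}" local_port="${2:-3000}"
  "${KUBECTL[@]}" port-forward --namespace "$namespace" \
    svc/kube-prometheus-grafana "${local_port}:80"
}

# Usage: grafana_port_forward, then browse to http://localhost:3000
```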

11. Security Best Practices

- Implement RBAC to control access.

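- Keep TLS in place for the API server and etcd; k0s generates the required certificates automatically.

- Limit who can log in to worker nodes: the worker service created by `k0s install worker` runs as root.

- Rotate cluster certificates regularly, following the procedure the k0s documentation gives for your release.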
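As a concrete RBAC example, the sketch below grants one user read-only access to pods in a single namespace. It then checks the result by impersonating that user. The user `jane` and the role name `pod-reader` are hypothetical. Note that `kubectl create role` fails with AlreadyExists if you run it twice.

```bash
KUBECTL=(sudo k0s kubectl)

# Create a namespaced read-only role for pods, bind it to a user, and then
# impersonate the user to confirm the permission (prints yes or no).
grant_pod_reader() {
  local user="$1" namespace="${2:-default}"
  "${KUBECTL[@]}" create role pod-reader --namespace "$namespace" \
    --verb=get,list,watch --resource=pods &&
    "${KUBECTL[@]}" create rolebinding "pod-reader-$user" --namespace "$namespace" \
      --role=pod-reader --user="$user" &&
    "${KUBECTL[@]}" auth can-i list pods --namespace "$namespace" --as="$user"
}

# Usage: grant_pod_reader jane default
```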

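12. Upgrading k0s

Upgrade the controller before the workers, because Kubernetes does not support a kubelet that is newer than the API server.

Controller Node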

```bash
sudo k0s stop
sudo mv /usr/local/bin/k0s /usr/local/bin/k0s.bak
curl -sSLf https://get.k0s.sh | sudo sh
sudo k0s start
```

Worker Node

```bash
sudo k0s stop
curl -sSLf https://get.k0s.sh | sudo sh
sudo k0s start
```
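Both upgrade sequences follow the same pattern, so you can wrap them in one helper. The sketch below assumes the default install path `/usr/local/bin/k0s` used above. Unlike the `mv` in the controller steps, it copies the old binary, so the node still has a working `k0s` if the download fails. It also enables `pipefail` so that a failed download is not masked by the pipe. Run it on the controller first, then on each worker. The function name is hypothetical.

```bash
# Upgrade k0s on the current node: stop, back up the binary, install the
# latest release via the official script, restart, and show status.
upgrade_k0s_node() {
  local bin="${1:-/usr/local/bin/k0s}"
  sudo k0s stop || return
  # cp rather than mv keeps a usable binary in place if the install fails.
  sudo cp "$bin" "$bin.bak" || return
  # pipefail makes a failed curl fail the whole pipeline.
  ( set -o pipefail; curl -sSLf https://get.k0s.sh | sudo sh ) || return
  sudo k0s start && sudo k0s status
}

# Usage: upgrade_k0s_node
```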

13. Common Issues & Fixes

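| Problem | Solution |
|---|---|
| Worker not listed | Run `sudo k0s status` and check the logs on the worker node |
| Controller unreachable | Ensure the controller's ports 6443 (API server) and 8132 (Konnectivity) are reachable from the workers |
| Pods stuck in Pending | Run `sudo k0s kubectl describe pod <pod-name>` and read the Events section |
| Expired token | Create a new one with `sudo k0s token create --role=worker` |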
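For the "Controller unreachable" case, you can test from a worker whether the controller's ports accept TCP connections. This sketch relies on Bash's built-in `/dev/tcp` redirection and on `timeout` from coreutils. The port list assumes the API server (6443) and Konnectivity (8132) ports, and the helper names are hypothetical.

```bash
# Succeed if a TCP connection to host:port opens within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report each controller port a worker needs; return non-zero if any is closed.
check_controller_ports() {
  local host="$1" port failed=0
  for port in 6443 8132; do
    if port_open "$host" "$port"; then
      echo "open:   $host:$port"
    else
      echo "closed: $host:$port"; failed=1
    fi
  done
  return "$failed"
}

# Usage (placeholder controller IP): check_controller_ports 192.0.2.10
```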

Check logs:

```bash
# On the controller:
journalctl -u k0scontroller -f

# On workers:
journalctl -u k0sworker -f
```
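Following the logs live helps while you reproduce a problem. For a quick look back, you can filter recent journal entries instead. The rough sketch below assumes the `k0scontroller`/`k0sworker` unit names used above. Its case-insensitive `error|fail` pattern is a crude heuristic, not a guarantee about the k0s log format.

```bash
# Show recent journal lines mentioning errors or failures for a k0s unit.
recent_k0s_errors() {
  local unit="${1:-k0sworker}" since="${2:-15 min ago}"
  sudo journalctl -u "$unit" --since "$since" --no-pager | grep -iE 'error|fail'
}

# Usage: recent_k0s_errors k0scontroller "1 hour ago"
```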

14. Real-World Scenario: Edge Deployment

A retail business deploys 5 Raspberry Pi devices per store:

- One as a controller

- Four as workers

Applications: Nginx + Redis

Monitoring: Central Prometheus setup

Why k0s?

- Minimalist, fast install

- Secure for remote environments

- No external dependencies

- Easily scalable

15. Final Thoughts

k0s delivers a simple yet powerful way to run Kubernetes with less hassle. Whether you’re managing a dev sandbox, deploying to the edge, or scaling in production, k0s provides a smooth, secure experience — all from a single binary.

In just a few commands, you can spin up a complete Kubernetes cluster without the overhead of traditional setups.